Chronic Disease Research Group

Hennepin Healthcare Research Institute

701 Park Ave.

Suite S2.100

Minneapolis, MN 55415

Email: cdrg@cdrg.org

Tel: 612-873-6200

Mon-Fri: 8:00 AM - 4:30 PM CT

__________________________________________________________________________________

Nicholas Wood, PhD, Biostatistician

June 6, 2025

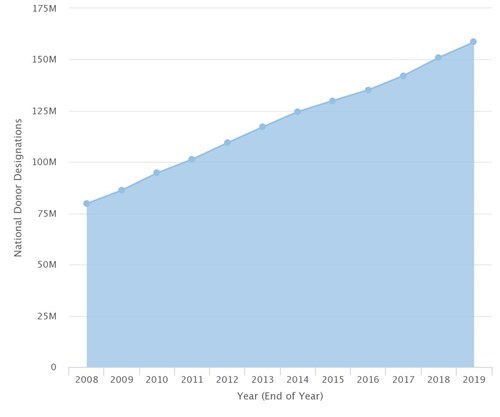

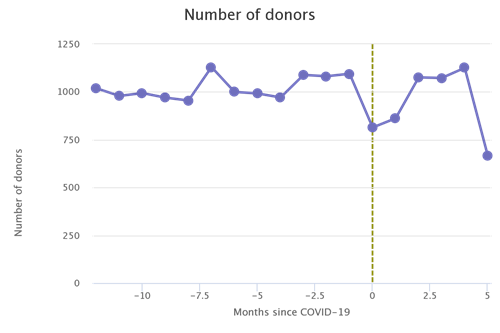

In early 2024, the Organ Procurement and Transplantation Network (OPTN) Expeditious Task Force announced its “bold goal of achieving 60,000 successful, lifesaving deceased donor transplants annually in the U.S. by the end of 2026,” which “represents a 58% increase in deceased donor transplants over the next three years.”1 Squarely halfway through that 3-year period, now is a fitting time to assess the Expeditious Task Force’s goal to see where things stand. We will do this using the Donation and Transplant System Explorer provided by the Scientific Registry of Transplant Recipients (SRTR).2,3 Using this tool, we can look at the yearly number of deceased donor transplants in the United States over the past decade.

Figure 1. Yearly counts of deceased donor transplant recipient registration forms (ie, deceased donor transplants). Source: https://www.srtr.org/tools/donation-and-transplant-system-explorer/ (reference 2).

Figure 1 is read as follows. From left to right, we move forward in time from 2016 to the present (as of this writing, approximately May 2025; see footnote 4). Each day, the number of deceased donor transplants in the preceding 12 months is calculated and plotted as a blue line. For example, the value of the blue line on January 1, 2024, gives the number of deceased donor transplants in 2023: just shy of 40,000. Similarly, the value of the blue line on May 1, 2025, gives the number of deceased donor transplants between May 1, 2024, and May 1, 2025: just shy of 42,000.

In the first 17 months since the Expeditious Task Force announced its goal, the number of yearly deceased donor transplants increased by about 2,000. To achieve the goal of 60,000, the number of yearly deceased donor transplants would need to increase by about 18,000 over the next 19 months. For context, this is approximately the same absolute growth that occurred over the past decade. In other words, at this point it is safe to conclude that the Expeditious Task Force will not achieve its goal.5
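The pace comparison above can be checked with a few lines of arithmetic. The sketch below uses the rounded values read off Figure 1 (the post describes them as "just shy of" 40,000 and 42,000), so the results are approximate; the function and variable names are illustrative only.

```python
# Rounded figures from the post; the exact counts are slightly lower.
START_RATE = 40_000      # yearly deceased donor transplants, January 1, 2024
CURRENT_RATE = 42_000    # yearly deceased donor transplants, May 1, 2025
GOAL = 60_000            # Task Force goal for the end of 2026
MONTHS_ELAPSED = 17      # months since the goal was announced (per the post)
MONTHS_REMAINING = 19    # months left until the end of 2026 (per the post)


def monthly_pace(change: float, months: int) -> float:
    """Average change in the yearly transplant count per month."""
    return change / months


observed_pace = monthly_pace(CURRENT_RATE - START_RATE, MONTHS_ELAPSED)
required_pace = monthly_pace(GOAL - CURRENT_RATE, MONTHS_REMAINING)
speedup = required_pace / observed_pace

print(f"Observed pace: {observed_pace:.0f} per month")
print(f"Required pace: {required_pace:.0f} per month")
print(f"Required speed-up: {speedup:.1f}x")
```

Under these rounded inputs, growth would need to run roughly eight times faster than it has since the announcement.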

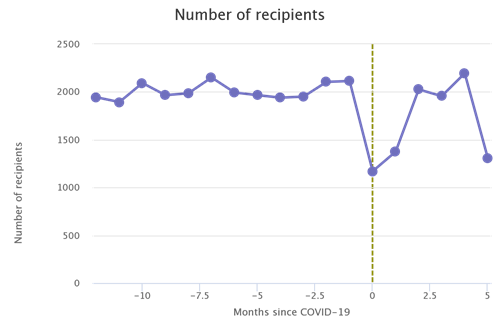

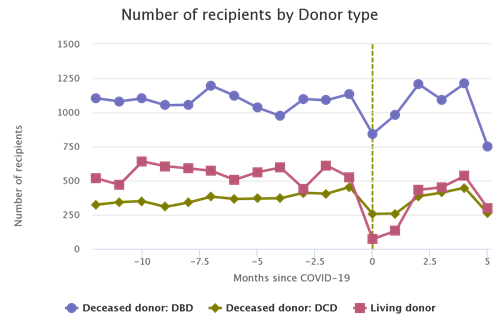

While it was a noble and admittedly “bold goal,” there are aspects of transplant volume that are out of the control of the Expeditious Task Force. To see this, consider the following. Recently, something has been happening in the United States that has not happened for the past 10 years—a rapid decrease in the number of drug overdose deaths6 (Figures 2 and 3; also Figure 1a from the National Vital Statistics System’s report on Provisional Drug Overdose Death Counts7).

This is undoubtedly a good thing, but what are its implications for transplantation? Historically, the third or fourth most prevalent mechanism of death in the deceased donor population has been drug intoxication. If the overall number of drug overdose deaths is decreasing in the United States, then we would expect the number of deceased donors resulting from those deaths to similarly decrease, and that is exactly what we see.

Figure 2. Yearly counts of deceased donors with a mechanism of death of drug intoxication.2

Figure 3. Yearly number of drug overdose deaths (blue) and number of donors resulting from drug intoxication (red). Sources: CDC data: https://www.cdc.gov/nchs/nvss/vsrr/drug-overdose-data.htm (reference 7), accessed June 2, 2025; SRTR data: https://www.srtr.org/tools/donation-and-transplant-system-explorer/ (reference 2).

This is likely just one of myriad factors that influence the overall number of deceased donor transplants performed in the United States. The Expeditious Task Force cannot directly control transplant volume, so it would be hard to assign it credit or blame regardless of whether its goal of 60,000 successful, lifesaving annual transplants by the end of 2026 is achieved. For that reason, it is my opinion that the goal was unrealistic, not because 60,000 was unrealistically high, but because the target by which to judge the success of the Task Force was tied to something not directly under its control.

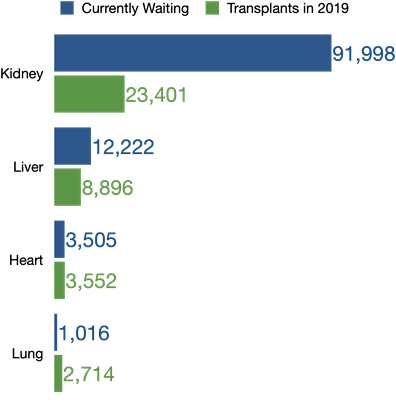

It must be acknowledged that the Task Force was also working on implementing and testing allocation variances to increase system efficiency and begin to address the current high rate of organ non-use. We are currently seeing 29% non-use of kidneys that are procured for the purpose of transplant. Addressing this non-use alone could result in up to 9,000 more deceased donor kidney transplants annually (Figure 4).

Figure 4. Annual number of deceased donor kidneys procured for transplant but not transplanted.2

Finally, more efficient organ offer processes might reduce the burden on transplant programs of fielding organ offers that never actually reach them because the organ is accepted earlier in the process. For example, we currently observe an average of over 10 programs notified of each deceased donor kidney that never receive a primary offer for one of their candidates (Figure 5). Implementing reasonable limits on how many programs are notified, based on the donor’s characteristics, may reduce this burden and is more within the control of the Expeditious Task Force. Decreasing unnecessary offers reduces workload and likely increases the chance that a program will accept the offers it does receive.

Figure 5. Annualized average number of excess programs notified of a deceased donor kidney offer. An excess program is defined as a program that received an offer for the kidney but was never made primary for any of its candidates.2

In conclusion, the Expeditious Task Force’s bold goal of 60,000 deceased donor transplants (successful, lifesaving, or otherwise) appears unattainable. We as a transplant community must continue to work together to identify ways to increase utilization of existing deceased donor organs and continue efforts to expand the deceased donor pool, until such time as we see deaths on the waiting list reduced to a minimum. The dedicated people in this field, including those on the Expeditious Task Force, can continue to identify ways to make this a reality.

References and Footnotes

Did you enjoy this post? Follow us for more!

__________________________________________________________________________________

May 1, 2025

In 2022, the Scientific Registry of Transplant Recipients (SRTR) hosted a consensus conference—sponsored by the Health Resources and Services Administration (HRSA)—that redefined what patient-centered care looks like in the organ transplant system. Instead of being sidelined, transplant recipients, living donors, and family members were fully integrated into every stage of the conference. They participated as speakers, moderators, and panelists, offering valuable lived experiences that influenced discussions on how to improve transplant metrics and outcomes. Through preconference training that reduced knowledge gaps, patients were prepared to contribute confidently and engage in high-level policy discussions. Their involvement sparked significant changes, such as ending the use of the term "discard" for unused donor organs—emphasizing how language shapes perception and practice in medicine.

By treating patients not as customers, but as co-investors in the health care system, the SRTR team created a new model for how medical conferences should be run. Patients’ voices were treated as equal to those of professionals, thus helping shape a more humane and data-informed approach to transplant care. Some key takeaways were the need for long-term outcome tracking, more patient-centered metrics beyond just mortality, and tools that promote shared decision-making between patients and providers. This conference set a new standard for health care conference best practices and demonstrated that real transformation in transplant care is possible when patients are not only heard, but also actively involved in driving change.

This AI-assisted summary is based on the following publication: Davis E Jr, Jones J, McCarthy M, McKee K, Reid M, Rogers D, Schmidt R, Tabatabai A, Hart A. The impact of engaging transplant recipients in health care policy deliberations. Prog Transplant. 2025 Mar;35(1):45-47. doi: 10.1177/15269248241304792.

__________________________________________________________________________________

Jonathan Miller, PhD, Biostatistician

February 9, 2024

In the previous post, introducing our work at the Scientific Registry of Transplant Recipients (SRTR) on the use of race in medical algorithms, we proposed that algorithms should be examined individually for whether or not to include adjustment for race. We offered the case of the kidney donor risk index (KDRI) as an example of an algorithm that should not include adjustment for race. In this post, we offer an example of an algorithm that we believe should include adjustment for race.

Case 2 – Include Race: CALC Donation Rates

The KDRI is an algorithm that tries to predict risk in the kidneys of an individual donor. Compare this to an algorithm that tries to identify high- and low-performing organ procurement organizations (OPOs). The thing being measured in an OPO evaluation algorithm is an organization—a group of people serving a population. While algorithms that measure individuals may be suspect because race is not biological, some of the social disparities experienced by some races may be very important to account for when the thing being measured is an organization.

Well-known historical examples like the Tuskegee Syphilis Study form a ground for reasonable mistrust of health care systems among minority racial groups in the United States. Organ donation is an act based on altruism and on trust. The example of the KDRI is one of many reasons that mistrust may continue. Labeling organs from Black donors systematically as “higher risk” can disrupt the trust that their organs will actually be transplanted if they choose to donate.

A current algorithm used to evaluate OPOs is the ratio of actual donors to potential donors.1 Potential donors, in this algorithm, are defined as deaths in the OPO’s service area for which the cause of death, age, and location are consistent with transplant (CALC deaths). A controversial question has been whether this ratio should be adjusted for race. One side has proposed that race should not be expected to affect an individual’s altruism in the decision to donate. We have proposed that while altruism is universal, trust in the health care system differs considerably across races and could produce very different average donation rates between races.

We studied whether to adjust for race in the OPO algorithm by calculating the ratio both with and without adjustment for race.2 We first found that there are differences in the national average rate of donation across races. While the ratio of actual donors to potential donors was 12.1% among White patients, the ratio was 10.0% among Black patients. If the OPO algorithm is not race adjusted, it means that in order to be in a high-performing tier, OPOs would need to perform better among Black potential donors than the national average among Black donors, but would not necessarily need to perform better among White potential donors than the national average among White donors.

In fact, when we compared tier ratings of OPOs between ratios that did and did not adjust for race, we found that eight OPOs would change evaluation tier. Particularly notable are two OPOs, which have 39.1% and 44.7% non-White potential donors, respectively. Both of these OPOs would change from tier 3 to tier 2 if the ratio were race adjusted and would therefore not be at risk of decertification without the chance to recompete for their service area. Actually, both of these OPOs also perform better than the national average among non-White donors. We interpret this to mean that even though these OPOs perform better than the national average among non-White potential donors, they are at risk for decertification because they have a high proportion of non-White donors, while an OPO that has a high proportion of White donors may not have to perform better than the national average among their donors to be recertified. Therefore, we believe the OPO ratios are an algorithm that should be adjusted for race.
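The mechanism described above can be illustrated with an observed-to-expected comparison. The post does not spell out how the adjustment is computed, so the sketch below assumes simple indirect standardization; the national rates (12.1% and 10.0%) come from the post, while every count is hypothetical and chosen only to show how an OPO serving mostly Black potential donors can look below average without adjustment and above average with it.

```python
# National donation rates quoted in the post (actual donors / CALC deaths).
NATIONAL_RATE = {"White": 0.121, "Black": 0.100}


def expected_donors(calc_deaths: dict[str, int], rates: dict[str, float]) -> float:
    """Donors expected if the OPO matched the given rate in each group."""
    return sum(calc_deaths[group] * rates[group] for group in calc_deaths)


def pooled_rate(national_deaths: dict[str, int]) -> float:
    """Single national rate that ignores race (used for the unadjusted ratio)."""
    donors = expected_donors(national_deaths, NATIONAL_RATE)
    return donors / sum(national_deaths.values())


# HYPOTHETICAL counts, not taken from SRTR data.
national_deaths = {"White": 80_000, "Black": 20_000}
opo_deaths = {"White": 400, "Black": 600}
opo_donors = {"White": 48, "Black": 63}  # 12.0% and 10.5% donation rates

observed = sum(opo_donors.values())
unadjusted_expected = sum(opo_deaths.values()) * pooled_rate(national_deaths)
adjusted_expected = expected_donors(opo_deaths, NATIONAL_RATE)

unadjusted_ratio = observed / unadjusted_expected
adjusted_ratio = observed / adjusted_expected

print(f"Unadjusted observed/expected: {unadjusted_ratio:.2f}")
print(f"Race-adjusted observed/expected: {adjusted_ratio:.2f}")
```

In this toy setup the OPO exceeds the national rate among Black potential donors, yet its unadjusted ratio falls below 1 because the pooled benchmark is dominated by the higher White donation rate.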

Conclusions

There is no quick fix for bias in medical algorithms. It may be attractive to say we should never adjust for race in algorithms, but this can be just as risky as always including race. Each algorithm should be considered on its own. The algorithm should be tried with and without adjustment for race. If including race makes a difference in the outcome of the algorithm, we should consider why. When considering why race shows up statistically in algorithms, it is important to explore biological as well as social reasons. Race is not biological and shouldn’t be used as a proxy for biological measures in algorithms. But failing to explore biological explanations would mean ignoring explanations like the APOL1 gene, which could be used in other contexts to improve outcomes for Black kidney patients. Similarly, failing to explore social explanations could mean failing to recognize that some organizations are actually performing quite well among the patients they serve.

References

__________________________________________________________________________________

Jonathan Miller, PhD, Biostatistician

December 6, 2023

Algorithms have an important place in making public health and medical decisions. All algorithms require assumptions, and assumptions allow bias. Racial bias in modern statistics began as early as Francis Galton, a British polymath who began the eugenics movement in the 19th century. Galton thought it possible to measure culture and mathematically prove that Western European culture was superior to all other cultures.1 Many prominent statisticians who followed Galton also believed in social Darwinism or eugenics.2,3

Today, assumptions are being brought to light and tested. A well-known example of this is the equation for estimating patients’ kidney function. This equation originally included Black or African American race as one of the variables for estimating kidney function. The equation’s developers tried to explain the statistical relationship of Black race with kidney function with the erroneous assumption that Black patients had higher creatinine values because of having more muscle mass.4 This equation and assumption led to disparities, like Black patients having to have worse kidney function before they could start banking time on the kidney transplant waiting list than patients of other races. Uncovering the bias in the estimate of kidney function opened a wave of examinations of other equations used in transplant. The issue of including race in an equation has become contentious, and some say it should never be included in medical or public health equations.

In my work for the Scientific Registry of Transplant Recipients, we have carefully considered the inclusion of race in a number of equations. We use a framework proposed by the National Quality Forum, specifically:

When there is a conceptual relationship (i.e., logical rationale or theory) between sociodemographic factors and outcomes or processes of care and empirical evidence (e.g., statistical analysis) that sociodemographic factors affect an outcome or process of care reflected in a performance measure:

AND

This framework has led us to conclude that in some cases race should continue to be included in the algorithm and in some cases it should not. Over this and the next blog post, we will provide an example of each case.

Case 1 – Stop Including Race: The Kidney Donor Risk Index

The kidney donor risk index (KDRI) is an equation used to estimate the risk of graft failure from a particular kidney donor. The KDRI equation was modeled in 2009 and includes Black race as a predictor.6 KDRI is used in the policies that determine how potential deceased kidney donors are matched to recipients. The KDRI statistical model identified Black donors as having higher risk for graft failure. While the Black race predictor met the criteria of having an empirical relationship with graft failure, continuing to include it as a predictor also requires having an appropriate conceptual relationship. Race is social, not biological, which makes including race in equations for individual risk immediately suspect.

Our current best guess for why Black race statistically predicts kidney failure in donors or in patients is the higher probability of carrying a certain version of the APOL1 gene that protects against sleeping sickness.7 Genetic explanations have historically been misused or erroneous when used to explain racial differences, especially when trying to establish foolish ideas like universal superiority of fitness. Universal superiority of fitness could only exist if all environments were identical and never changed. Genes move in response to risks in specific environments, and a gene that protects from one risk might increase another risk. In the case of APOL1, there are versions of the gene that only occur in people with recent African ancestry that protected their carriers against dying from sleeping sickness. Unfortunately, if a person carries this version on both of their chromosomes, it also increases the risk of having kidney failure later in life.

So, we currently believe that statistical risk of kidney failure in Black donors is at least partly explained by a higher chance of carrying two higher risk APOL1 versions. The current best estimates are that about 13% of Black donors or patients may carry two higher risk APOL1 versions.7 Thus, a statistical model that uses an average risk for Black race would overestimate the risk of kidney failure in 87% of Black donors, and underestimate the risk in those 13% of donors who carry two higher risk APOL1 versions. It would be better to replace the Black race predictor with APOL1 risk as a predictor. But APOL1 versions are not data currently collected for kidney donors.
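A small calculation shows why a single average coefficient misses both groups. The 13% share comes from the post; the relative risk assigned to donors with two higher-risk APOL1 versions is a hypothetical placeholder, used only to make the arithmetic concrete.

```python
P_TWO_VARIANTS = 0.13  # share with two higher-risk APOL1 versions (from the post)
RR_LOW = 1.0           # reference risk: zero or one higher-risk version
RR_HIGH = 2.0          # HYPOTHETICAL relative risk with two higher-risk versions

# A race coefficient effectively assigns every Black donor the group average.
average_rr = (1 - P_TWO_VARIANTS) * RR_LOW + P_TWO_VARIANTS * RR_HIGH

overestimate = average_rr / RR_LOW - 1    # error for the 87% at reference risk
underestimate = 1 - average_rr / RR_HIGH  # error for the 13% at higher risk

print(f"Average relative risk assigned to everyone: {average_rr:.2f}")
print(f"Overestimate for 87% of donors: {overestimate:.0%}")
print(f"Underestimate for 13% of donors: {underestimate:.1%}")
```

Whatever the true relative risk is, the averaged value sits between the two groups, so it is too high for the majority and too low for the minority.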

Given the lack of data to identify and measure APOL1 risk, decision-makers are left with the problem of whether it is better to possibly overestimate the risk of kidney failure in 87% of potential Black kidney donors or underestimate the risk of kidney failure in 13% of potential Black kidney donors.

There are about 140,000 candidates listed for kidney transplant and about 26,000 kidneys transplanted in a year.8 The percent of kidneys recovered from donors but not transplanted is now above 24%, and a higher KDRI increases the chance that the donor’s kidney will not be used.8 However, there are signs that getting a transplant, even with a higher KDRI kidney, is better than other treatments like dialysis.9 And with a higher chance of closer common ancestry, it may be easier for Black transplant candidates to find matches on genes like those of the human leukocyte antigens that reduce the risk of failure after transplant from Black donors. These considerations seem to give weight to removing the Black race predictor from KDRI, even if it may underestimate the risk of kidney failure in 13% of Black potential donors.

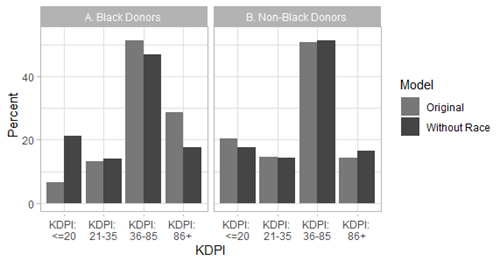

In fact, we modeled what would have happened if Black race had never been included in KDRI. We found that the percent of potential Black donors in the “highest risk” KDRI category (85th percentile and above) would drop from 28.7% to 17.7% and the percent of potential Black donors in the “lowest risk” KDRI category (20th percentile and below) would rise from 6.6% to 21.4% (Figure 1). This reclassification of kidneys from Black donors from “higher risk” to “lower risk” could decrease the chance that kidneys from Black donors are recovered but not used and could help reduce disparities in kidney transplant. Therefore, KDRI is an algorithm from which we would recommend removing the Black race predictor.

Figure 1: Percent of Black and non-Black donors by KDRI category with or without the race coefficient.

References

__________________________________________________________________________________

Cory Schaffhausen, PhD, Human Centered Design Engineer

October 2, 2023

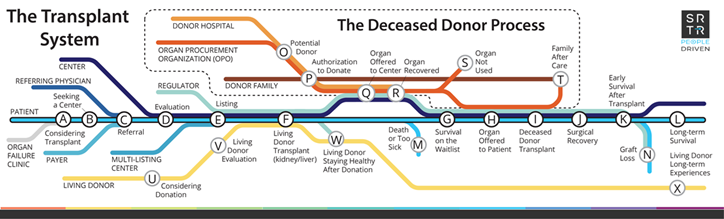

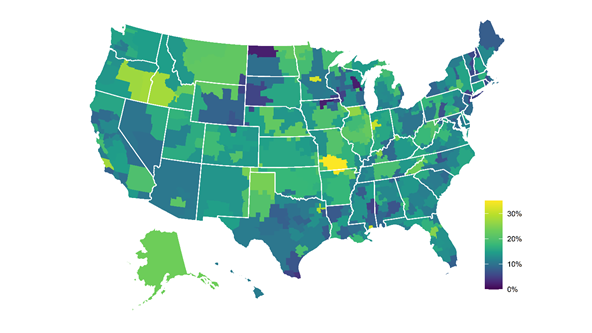

Organ transplant is the best therapeutic option to improve quality of life and reduce mortality for many patients with organ failure. Unfortunately, over 105,000 patients across the United States are now waiting for a kidney or liver transplant. Due to an extreme organ shortage, more than 10,000 patients die or become too sick for a transplant each year while on the waiting list. Despite numerous research interventions, rates of waitlisting and transplant have not increased in the United States in the past 2 decades. Organ procurement organizations (OPOs) are a primary driver of the deceased organ donation process and provide local service areas with education and family support (Figure 1). OPOs are a critical resource for disseminating interventions through local community relationships. However, OPOs face a barrier to continuously improving and scaling interventions, because the national transplant system does not provide a feedback loop to identify effective interventions in individual communities.

Figure 1: Schematic diagram of the organ transplant system and the role of organ procurement organizations in the deceased organ donation process (red line).

Racial disparities persist at each stage of the transplant process. Populations that are overrepresented in rates of organ failure are underrepresented in rates of organ transplant and organ donation.

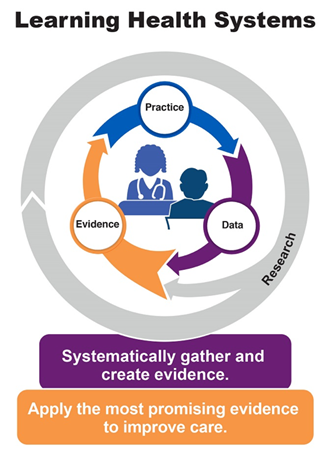

A learning health system (LHS) approach offers a sustainable method to embed engagement and long-term monitoring into the transplant system. The Institute of Medicine defined LHS as “A system in which science, informatics, incentives, and culture are aligned for continuous improvement and innovation, with best practices seamlessly embedded in the care process, patients and families are active participants in all elements, and new knowledge is captured as an integral by-product of the care experience.” (Best Care at Lower Cost, 2012)

For the current organ donation system, new knowledge is captured for donation rates and waitlist and transplant outcomes; however, it is not fully put to use in improving organ donation equity. An LHS seeks to rapidly and continuously feed data into practice that is informed by stakeholder engagement (Figure 2). The organ donation process has the potential to benefit from the LHS model because OPOs collect wide-ranging organ donation data. These data are analyzed by the Scientific Registry of Transplant Recipients (SRTR), a federal government contract administered by the Chronic Disease Research Group (CDRG). SRTR analyzes and reports these data to monitor organ donation outcomes. Unfortunately, current analyses are insufficient to monitor equity in organ donation. This gap limits the ability to identify 1) long-term effectiveness of OPO-level interventions beyond the duration of research follow-up and 2) top-performing OPOs nationally to disseminate best practices for engaging underserved populations with low rates of organ donation.

Figure 2: The Agency for Healthcare Research and Quality’s model for LHS.1

Researchers at CDRG were recently awarded a National Institutes of Health research grant to study methods to use national registry data from SRTR to develop equity-focused tools modeled after an LHS approach. The project is a collaboration with LifeSource, the OPO that serves Minnesota, South Dakota, North Dakota, and parts of Wisconsin. The grant has many related goals, including 1) significant community outreach to develop tailored and culturally competent materials to improve community awareness of organ donation and 2) creation of organ donation data tools, built from SRTR and linked data, that will describe organ donation at county, race, and ethnicity levels to monitor disparities in organ donation. The development of data tools will include broad stakeholder engagement from OPO professionals and transplant professionals, as well as community groups. While the work has only recently begun, the 5-year grant will explore how to support OPOs as an LHS using data such as:

• disaggregated race and ethnicity data;

• county-level population demographic information and expected donation rates for race and ethnicity groups;

• county-level estimated donor registration rates for race and ethnicity groups;

• organ donation time trends for populations experiencing health disparities; and

• living donation disaggregated data by race and ethnicity.

The data can be a resource for OPOs and the public to understand organ donation equity, which is critical for informing community engagement and potential interventions to improve organ donation outcomes and equity.

References

1. From About Learning Health Systems. Content last reviewed May 2019. Agency for Healthcare Research and Quality, Rockville, MD. https://www.ahrq.gov/learning-health-systems/about.html

__________________________________________________________________________________

Grace Lyden, PhD, Biostatistician

April 24, 2023

In the United States, there are simply not enough deceased donor organs to transplant into every patient with end-stage organ failure at the time of diagnosis. Instead, each patient must join a national waiting list for the organ they need and wait to receive an offer of a suitable organ for them. So, an important question to answer for patients and their care teams is “How long does it take to get a transplant?”

The waiting time to receive a suitable organ offer depends on many factors. Some patients are easier to match, for example, based on their blood type and other immunologic sensitivities. Depending on the organ, allocation policy might prioritize patients who have been on the waiting list for longer, patients who are more in need of transplant, or patients more likely to benefit from transplant. That is, a patient might have to wait until they are “sick enough” to receive an offer, but not “too sick.”

Other patients, who might have waited longer or need the organ more, also need to be considered. So, a particular patient’s waiting time depends on not only that particular patient’s need, but also who else is on the waiting list at the same time, how long they have waited, and how sick they are.

All of these factors make estimating the waiting time for transplant a difficult problem. At the same time, it is one of the most important statistical problems in transplantation. This information helps patients and care teams decide whether to pursue alternatives to deceased donor transplant, like living donor transplant (for kidney and liver) or ventricular assist devices (for heart). Policymakers also need waiting time estimates to understand the impact of policy changes and evaluate equity in transplantation.

As a transplant statistician, I think about waiting time a lot. And when I am working with members of the transplant community who want to know about waiting time, my first step is to identify what they are really asking. It is not enough to say, “How long does it take to get a transplant?” There are at least three underlying questions someone might be asking when they say this, which have different answers that are estimated by different statistical methods.

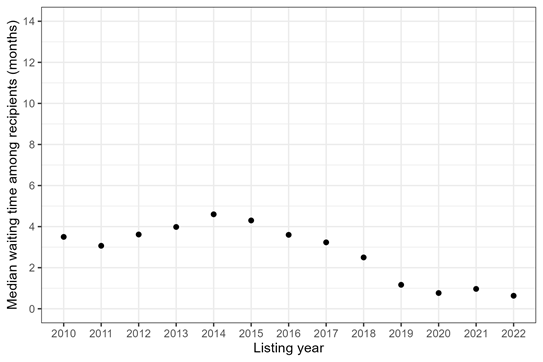

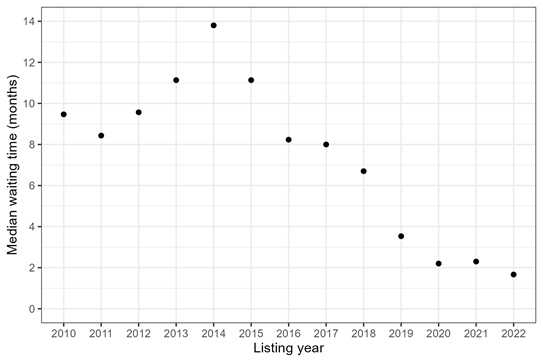

The first underlying question someone might be asking is “How long do transplant recipients wait for transplant?” This can be important, for example, when we want to understand how the transplant recipient population is changing over time. It’s also very easy to answer. You simply look at all of the people who received a transplant during a particular time frame and calculate how long they waited. Figure 1 shows median waiting time among adult heart recipients who were listed in 2010-2022. A “median” means that 50% of recipients had a waiting time less than or equal to that number.

Figure 1: Median waiting time for heart transplant among adult recipients, by listing year.

The problem with this approach is that it ignores all of the patients who were on the waiting list and did not make it to transplant. Some patients die while waiting for heart transplant; others are removed because their condition deteriorates (or improves) and they are no longer a good candidate for transplant. In other words, there are “competing events” that can occur, which prevent a patient from making it to transplant. When a patient is on the waiting list (or about to register) and wondering how long they will wait, it is important to acknowledge that these competing risks exist.
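The recipient-only calculation, and what it leaves out, can be sketched on a toy waiting list. The ten candidates below are hypothetical and are not drawn from SRTR data.

```python
from statistics import median

# HYPOTHETICAL candidates: (months until the outcome, outcome).
candidates = [
    (2, "transplant"), (3, "transplant"), (4, "transplant"),
    (5, "died"), (6, "transplant"), (8, "removed"),
    (9, "died"), (12, "transplant"), (15, "removed"), (20, "transplant"),
]

# Keep only the candidates who reached transplant, as in the first approach.
recipient_waits = [months for months, outcome in candidates if outcome == "transplant"]
recipient_median = median(recipient_waits)
excluded = len(candidates) - len(recipient_waits)

print(f"Median wait among recipients: {recipient_median} months")
print(f"Candidates ignored by this calculation: {excluded} of {len(candidates)}")
```

The median describes only the six candidates who were transplanted; the four who died or were removed do not enter the calculation at all.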

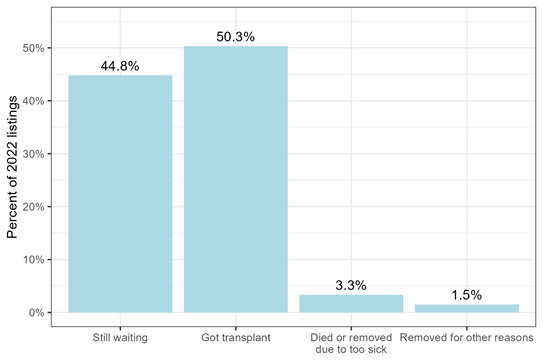

So, the second underlying question someone might be asking is “How long does it take for a patient to get a transplant, given that they might die or be removed from the waiting list before transplant?”

To answer this second question, we typically focus on the percentage of patients who underwent transplant after some amount of time, compared with the percentage who 1) were still waiting at that time, 2) died or were removed from the waiting list because they were too sick for transplant, or 3) were removed from the waiting list for other reasons. In statistics, these percentages are called “cumulative incidences.”

When the cumulative incidence of transplant is 50%, for example, half of all candidates have undergone transplant. This is another “median waiting time.” Figure 2 shows this median waiting time among adult heart candidates listed in 2010-2022.

Figure 2: Median waiting time for heart transplant among adult candidates, by listing year. The median waiting time is when 50% of candidates have received transplant, while the other 50% are still waiting or have experienced a competing event such as death.
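A minimal sketch of this candidate-based median follows, using hypothetical data in which every candidate's outcome is eventually observed. With complete follow-up, a cumulative incidence is simply the share of all candidates who have had a given outcome by a given time; real registry analyses also have to handle candidates whose follow-up ends early, which this sketch does not attempt. The outcome labels are simplified stand-ins for the categories in the text.

```python
# HYPOTHETICAL candidates with complete follow-up: (months to outcome, outcome).
candidates = [
    (2, "transplant"), (3, "transplant"), (4, "transplant"),
    (5, "died"), (6, "transplant"), (8, "removed"),
    (9, "died"), (12, "transplant"), (15, "removed"), (20, "transplant"),
]


def cumulative_incidence(data, outcome, at_time):
    """Share of ALL candidates who had `outcome` by `at_time` (no censoring)."""
    return sum(1 for t, o in data if o == outcome and t <= at_time) / len(data)


def candidate_median_wait(data):
    """First time at which at least 50% of all candidates have been transplanted."""
    for t in sorted({t for t, _ in data}):
        if cumulative_incidence(data, "transplant", t) >= 0.5:
            return t
    return None  # fewer than half of candidates ever reach transplant


median_wait = candidate_median_wait(candidates)
shares = {
    outcome: cumulative_incidence(candidates, outcome, median_wait)
    for outcome in ("transplant", "died", "removed")
}
shares["still waiting"] = sum(1 for t, _ in candidates if t > median_wait) / len(candidates)

print(f"Median wait among candidates: {median_wait} months")
for outcome, share in shares.items():
    print(f"  {outcome}: {share:.0%}")
```

Because the denominator is every candidate rather than only recipients, the four shares always add up to 100%, and the median can be undefined if fewer than half of candidates are ever transplanted.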

Both Figures 1 and 2 show that the median waiting time for adult heart transplant started to decrease in 2018, with the introduction of a new heart allocation policy that October. But especially pre-2018, the median waiting times for heart candidates that account for competing risks (Figure 2) were much longer than the median waiting times among heart recipients (Figure 1). For example, in 2014, the median waiting time for transplant recipients was 4 months, while the median waiting time for transplant candidates was 14 months. Those are two very different answers to the question “How long did it take to get a heart transplant in 2014?”

Zooming in on 2022, we can explore the cumulative incidence of each possible outcome by the median waiting time, which was 50 days after listing in 2022 (Figure 3). So, 50% of patients listed in 2022 had received a heart transplant after 50 days, while 3% of patients had died or been removed due to being too sick for transplant, 2% of patients had been removed for other reasons, and 45% were still waiting. 50% + 45% + 3% + 2% = 100%, representing all possible waitlist outcomes.

Figure 3: Waitlist outcome after 50 days waiting, for adults listed for heart transplant in 2022.

From the perspective of a patient who is still waiting, cumulative incidences (Figures 2-3) are less misleading than transplant waiting times among those who have undergone transplant (Figure 1) and also more actionable. For example, if a kidney transplant candidate has a high probability of dying before receiving a deceased donor transplant, they should be counseled to pursue living donation.

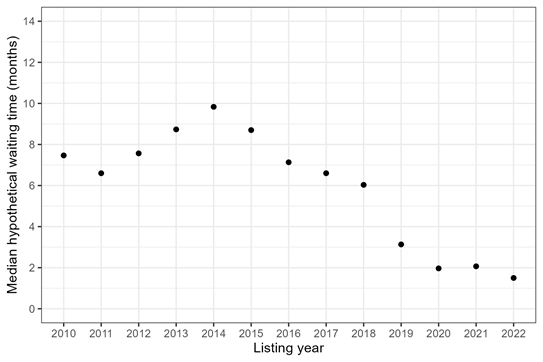

Cumulative incidences can help patients and clinicians understand the real-world probability of transplant and risk of death before transplant. Sometimes, however, we actually want to know the hypothetical waiting time for transplant if a patient could not die and stayed on the waiting list until transplant.

So, the third underlying question someone might be asking is, “How long would it take for a patient to get a transplant in a world without death?”

In statistics, this is called a counterfactual, because it represents a world that does not, in fact, exist. Schools of thought differ about how useful this measure is for clinical decision-making, because it does not represent the “real world.” At the same time, it might be of interest to clinicians who want to know a patient’s underlying allocation priority, isolated from their risk of death, and it allows us to compare transplant access across patients with different underlying risks of death.

This third question is the most difficult for statisticians to answer, because we do not collect data from a hypothetical world without death. We collect data in the real world. And for patients who die before transplant, we simply do not know how long they would have waited for transplant had they lived.

Several statistical methods have been created to deal with this issue. For example, a method called “inverse probability weighting” accounts for the fact that patients who died before transplant might have been sicker than patients who did not die before transplant, and up-weights the patients who lived so they represent both themselves and the patients who died.
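The up-weighting idea can be illustrated with a deliberately simplified Python sketch. It looks at a single follow-up time, uses one hypothetical risk group variable, and assumes that within a risk group the patients who died would have had the same chance of transplant as those who lived. The cohort, group names, and function name are all invented for illustration; real analyses typically use weights that change over follow-up time and many more patient characteristics.

```python
from collections import Counter

# Hypothetical toy cohort at a fixed follow-up time: (risk_group, status).
cohort = (
    [("sicker", "transplanted")] * 20
    + [("sicker", "died")] * 10
    + [("sicker", "waiting")] * 10
    + [("healthier", "transplanted")] * 24
    + [("healthier", "died")] * 6
    + [("healthier", "waiting")] * 30
)

def ipw_transplant_probability(records):
    """Weighted share transplanted, with survivors standing in for those who died."""
    group_size = Counter(group for group, _ in records)
    group_alive = Counter(group for group, status in records if status != "died")
    weighted_transplants = 0.0
    total_weight = 0.0
    for group, status in records:
        if status == "died":
            continue  # outcome in a world without death is unknown for these patients
        # Inverse of the probability of not dying within this risk group.
        weight = group_size[group] / group_alive[group]
        total_weight += weight
        if status == "transplanted":
            weighted_transplants += weight
    return weighted_transplants / total_weight

real_world = sum(status == "transplanted" for _, status in cohort) / len(cohort)
hypothetical = ipw_transplant_probability(cohort)
```

Here each surviving patient in the sicker group counts as 40/30 patients and each surviving patient in the healthier group counts as 60/54 patients, so the weighted cohort again represents all 100 listings. The weighted transplant probability (about 53%) is higher than the real-world cumulative incidence (44%), which mirrors the direction of the comparison between the hypothetical and real-world waiting times.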

Figure 4 applies inverse probability weighting to compute the hypothetical median waiting time for a heart transplant, in a world without death or waitlist removal before transplant, for adults listed in 2010-2022.

Figure 4: Median hypothetical waiting time for heart transplant among adult candidates, by listing year. The median hypothetical waiting time is when 50% of candidates would have received a heart transplant in a world without death or waitlist removal before transplant.

In a hypothetical world without death, the median waiting times for transplant are shorter than in the real world (Figure 2), which makes sense. But the hypothetical waiting times are still longer than real-world waiting times among transplant recipients (Figure 1). Is this surprising? Maybe. We might guess that the hypothetical waiting times would be similar to real-world recipient waiting times, because both are for people who have avoided the competing risk of death. But this is not the case. Restricting to transplant recipients (and ignoring everyone else who was waiting) still underestimates the waiting time for transplant, even in a hypothetical world without death.

In summary, there are at least three underlying questions that a person might be asking when they say, “How long does it take to get a transplant?” They might want to know 1) how long transplant recipients wait, 2) how long transplant candidates wait, given that they might die before transplant, or 3) how long transplant candidates would wait, if they could not die.

It is important to be precise when formulating any research question. The goal of the research, the target audience, and which decisions we hope to inform must all be considered. We can find meaningful answers to difficult problems, but only when we ask a meaningful question.

__________________________________________________________________________________

Nicholas Wood, PhD, Biostatistician

February 1, 2023

Creating public policy to achieve a desired end is not as easy as you might think. This is especially the case when considering something as complex as allocation policy for organ transplantation. Because there are far more patients awaiting transplant than there are donated organs, allocation policy is written to determine which patients get priority when a donated organ becomes available. Each organ has its own allocation policy. Here we shall discuss a recent change to liver allocation policy intended to reduce geographic disparity in access to liver transplants.

Liver allocation policy has historically prioritized patients primarily using medical urgency and geography. A patient’s medical urgency is quantified by the model for end-stage liver disease (MELD) score, which ranges from 6 to 40. Higher MELD scores correspond to greater medical urgency, and therefore greater priority is given to patients with higher MELD scores. However, this prioritization is not absolute—geography also plays a key role.

Once recovered, a donated liver has a limited time frame to be transplanted. Furthermore, within that time frame it is generally preferable to transplant the liver sooner rather than later. Therefore, greater priority is given to patients whose transplant center is closer to the donor. For many years this was done via the donation service area (DSA).

Figure 1. Donation service areas

Just as the United States can be divided into 50 states, it can be divided into 57 DSAs. (Note: The number of DSAs changes from time to time but was 57 at the time of this writing.) DSA boundaries are interesting, to say the least, but it is outside our scope to discuss how they came to be. All we need to know is that liver allocation policy has historically balanced medical urgency and geography by first prioritizing patients at transplant centers in the same DSA as the donor, and then, within that DSA, prioritizing patients with the highest MELD score.1

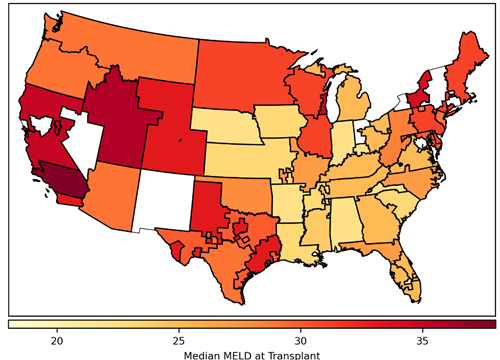

Over time, the transplantation community noticed that a patient’s access to transplant depended largely on which DSA their transplant center was in. We can show this by determining the median MELD at transplant for each DSA.

Figure 2. Median model for end-stage liver disease (MELD) score at transplant by donation service area. Includes deceased donor liver transplants occurring in 2014, excluding recipients who do not have a MELD score (ie, recipients younger than 12 years and status 1A/1B recipients). Donation service areas shaded white had no liver transplants during this time.

Median MELD at transplant can be interpreted as how sick a patient has to become to get a liver transplant. In 2014, the median MELD at transplant for recipients in the Alabama DSA was 22, whereas in one of the California DSAs it was 38. In other words, if you needed to get a liver transplant and were listed in California, you would need to be much sicker than if you were listed in Alabama. By calculating the variance in median MELD at transplant across all of the DSAs, we get a measure of geographic disparity. In 2014, the variance in median MELD at transplant was 20. While it’s difficult to interpret this measure of geographic disparity, it suffices to say that higher variance corresponds to greater geographic disparity. Therefore, the transplantation community set out to design a new allocation policy that would reduce geographic disparity by reducing the variance in median MELD at transplant.
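As a rough illustration of this disparity measure, the following Python sketch groups transplants by DSA, takes the median MELD at transplant within each DSA, and then computes the variance of those medians. The DSA labels and MELD values are hypothetical toy data, and the use of the population variance (rather than the sample variance) is an assumption, since the text does not say which was used.

```python
from collections import defaultdict
from statistics import median, pvariance

# Hypothetical toy data: (DSA label, MELD score at transplant).
transplants = [
    ("DSA A", 20), ("DSA A", 22), ("DSA A", 24),
    ("DSA B", 36), ("DSA B", 38), ("DSA B", 40),
    ("DSA C", 28), ("DSA C", 30), ("DSA C", 32),
]

def median_meld_by_dsa(records):
    """Median MELD at transplant within each DSA."""
    scores_by_dsa = defaultdict(list)
    for dsa, meld in records:
        scores_by_dsa[dsa].append(meld)
    return {dsa: median(scores) for dsa, scores in scores_by_dsa.items()}

def geographic_disparity(records):
    """Variance across DSAs of the median MELD at transplant (population variance assumed)."""
    return pvariance(list(median_meld_by_dsa(records).values()))

medians = median_meld_by_dsa(transplants)
disparity = geographic_disparity(transplants)
```

With medians of 22, 38, and 30, the variance in this toy example is about 42.7. If every DSA had the same median MELD at transplant, the variance would be 0.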

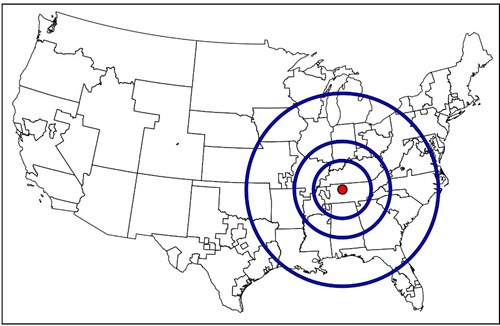

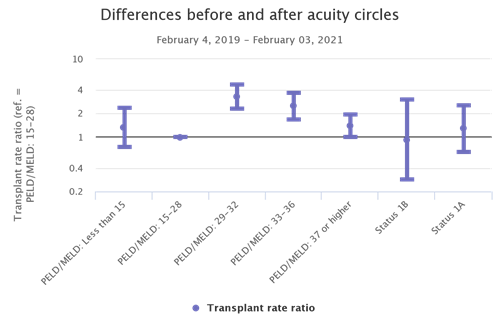

The new allocation policy that came out of this lengthy endeavor, the acuity circles policy, was implemented in early 2020. The acuity circles policy replaced the DSA as the geographic unit of allocation by using three concentric circles around the donor hospital. These circles were 150, 250, and 500 nautical miles in radius.

Figure 3. Acuity circles shown around an example donor hospital in Tennessee.

Acuity circles balances medical urgency and geography by first prioritizing patients with the highest MELD scores of 37 to 40, and then, within that range of MELD scores, prioritizing candidates first within the 150–nautical-mile circle, then the 250–nautical-mile circle, and then the 500–nautical-mile circle. This is then repeated for the lower MELD scores as well.2 Simply based on how much larger the circles are relative to the DSAs, intuition suggests that the acuity circles policy should reduce geographic disparity. There were also simulation studies performed by the Scientific Registry of Transplant Recipients that agreed with this intuition.
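The ordering described above can be sketched in Python as a sort on two keys: MELD band first, then circle. Only the 37 to 40 band and the three radii come from the text. The lower MELD bands, the tie-break by higher MELD within a band and circle, and the decision to leave out candidates beyond 500 nautical miles are assumptions made purely for illustration, as are the candidate names and values.

```python
from dataclasses import dataclass

CIRCLE_RADII_NM = (150, 250, 500)  # from the text
# Only the 37-40 band is stated in the text; the lower bands are hypothetical placeholders.
MELD_BANDS = ((37, 40), (33, 36), (29, 32), (15, 28), (6, 14))

@dataclass
class Candidate:
    name: str
    meld: int
    distance_nm: float  # distance from donor hospital to the candidate's transplant center

def band_rank(meld):
    """0 for the most urgent band, larger numbers for less urgent bands."""
    for rank, (low, high) in enumerate(MELD_BANDS):
        if low <= meld <= high:
            return rank
    raise ValueError(f"MELD score out of range: {meld}")

def circle_rank(distance_nm):
    """0 for the innermost circle; None if outside all three circles."""
    for rank, radius in enumerate(CIRCLE_RADII_NM):
        if distance_nm <= radius:
            return rank
    return None

def acuity_circles_order(candidates):
    """Order candidates by MELD band, then by circle, then by MELD (assumed tie-break)."""
    in_circles = [c for c in candidates if circle_rank(c.distance_nm) is not None]
    return sorted(
        in_circles,
        key=lambda c: (band_rank(c.meld), circle_rank(c.distance_nm), -c.meld),
    )

candidates = [
    Candidate("A", 38, 400),
    Candidate("B", 35, 50),
    Candidate("C", 40, 200),
    Candidate("D", 37, 100),
    Candidate("E", 30, 600),  # outside all circles, not covered by this sketch
]
ordered_names = [c.name for c in acuity_circles_order(candidates)]
```

In this example, candidate B is the closest to the donor hospital but is ranked after D, C, and A, because all three are in the 37 to 40 band. Under the DSA-based approach described earlier, geography was applied first and MELD second.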

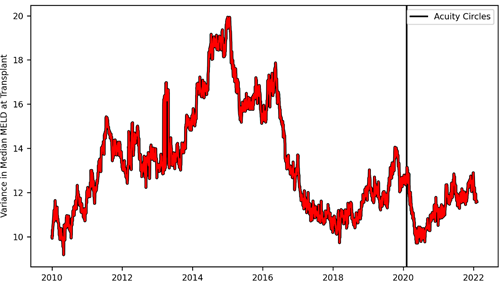

Acuity circles has now been in effect for three years and we can ask whether it achieved the desired goal of reducing geographic disparity. In the year preceding its implementation, the variance in median MELD at transplant was about 12; in the year after its implementation, the variance in median MELD at transplant was slightly lower, at about 11. Take a moment and look at these numbers again. Do you notice anything strange? In the years immediately surrounding implementation of the acuity circles policy, the variance in median MELD at transplant was between 11 and 12, but in 2014 it was nearly twice as high—at 20. So what happened?

It’s helpful to look at how variance in median MELD at transplant has changed over time to see the bigger picture. On each day from 2010 through 2022, we can calculate the variance in median MELD at transplant in the previous year.

Figure 4. Variance in median model for end-stage liver disease (MELD) at transplant over time. On each day, variance in median MELD at transplant was calculated based on deceased donor liver transplants in the previous year, excluding recipients who do not have a MELD score (ie, recipients younger than 12 years and status 1A/1B recipients). The vertical line indicates the date the acuity circles policy was implemented.
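A daily, trailing-year calculation like the one in Figure 4 could be sketched as follows in Python. The 365-day window, the treatment of its endpoints, the population variance, and the toy transplant records are all assumptions for illustration; the text specifies only that each day's value is based on transplants in the previous year.

```python
from datetime import date, timedelta
from statistics import median, pvariance

# Hypothetical toy data: (transplant date, DSA label, MELD at transplant).
transplants = [
    (date(2019, 3, 1), "DSA A", 20),
    (date(2019, 6, 1), "DSA B", 30),
    (date(2020, 3, 1), "DSA A", 24),
    (date(2020, 6, 1), "DSA B", 28),
]

def trailing_year_variance(records, as_of):
    """Variance in median MELD at transplant over the 365 days ending on `as_of`."""
    window_start = as_of - timedelta(days=365)
    scores_by_dsa = {}
    for when, dsa, meld in records:
        if window_start < when <= as_of:
            scores_by_dsa.setdefault(dsa, []).append(meld)
    medians = [median(scores) for scores in scores_by_dsa.values()]
    return pvariance(medians)  # raises StatisticsError if the window has no transplants

variance_end_2019 = trailing_year_variance(transplants, date(2019, 12, 31))
variance_end_2020 = trailing_year_variance(transplants, date(2020, 12, 31))
```

Evaluating this function for every day in a date range and plotting the results would produce a curve of the same kind as Figure 4.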

After acuity circles was implemented, the variance in median MELD at transplant dropped quickly and then rebounded shortly thereafter to roughly where it was prior to the policy change. At best, acuity circles minimally decreased geographic disparity and, at worst, it had no lasting impact on it at all. However, from approximately 2014 to 2018, the variance in median MELD at transplant plummeted from 20 to 10. What happened that caused geographic disparity to be cut in half? Unfortunately, I do not know. But what I do know is that it happened naturally, on its own, without the need for any committee, governing body, or policy to bring it about.3

I’d like to propose three lessons we can draw from this for policy-making in organ transplantation. First, anyone who participates in creating such policy (myself included) should approach the task with humility. For something as complex as organ allocation, with its many moving parts, it will never be entirely clear beforehand whether a proposed policy will achieve its desired end, even when everything seems to suggest it will. Second, the assumptions that underlie allocation simulations must be well understood and clearly explained, such that their results can be accurately interpreted. Otherwise, simulation results will mislead us instead of guide us.4 Finally, when confronting some problem in transplantation (eg, geographic disparity), perhaps it is worth asking whether policy is really what is needed to solve it.

Footnotes

__________________________________________________________________________________

Kirsten Johansen, MD, CDRG Co-Director

December 19, 2022

The United States Renal Data System 2022 Annual Data Report (ADR) was posted on October 31, 2022. This year’s report contains data from medical claims through 2020 (and for some end-stage renal disease [ESRD]–related metrics through the first half of 2021). As such, this is the first year in which the wide-ranging effects of the COVID-19 pandemic on the chronic kidney disease (CKD) and ESRD populations can be placed into the full context of the years that preceded its onset. Although we presented early views into the impact of the COVID-19 pandemic on the ESRD population in the 2020 ADR and expanded these analyses to include examination of COVID-19 diagnoses and outcomes in the CKD population in the 2021 ADR, the full magnitude of the direct and indirect effects of the pandemic on these populations comes into sharp focus throughout this year’s report.

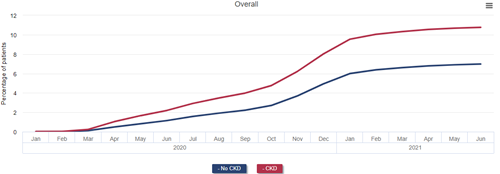

The direct effects of COVID-19 can be measured by examining patterns of patient testing, hospitalization, and mortality. Over 10% of patients with CKD, 13% of patients with a kidney transplant, and 20% of patients on dialysis in January 2020 were diagnosed with COVID-19 by the end of June 2021, rates that were approximately 50%, 100%, and 200% higher than those of Medicare beneficiaries without CKD, without a kidney transplant, and not on dialysis, respectively.

Cumulative incidence of diagnosed COVID-19 among Medicare beneficiaries by CKD and ESRD status, January 2020 - June 2021

Data Source: 2022 United States Renal Data System Annual Data Report

The incidence of hospitalization after COVID-19 diagnosis among patients with CKD was more than double that of those without CKD in 2020; patients receiving dialysis consistently had hospitalization rates higher still than those with earlier stages of CKD.

Monthly incidence of COVID-19 hospitalization among Medicare beneficiaries by CKD and ESRD status, January 2020 - June 2021

Data Source: 2022 United States Renal Data System Annual Data Report

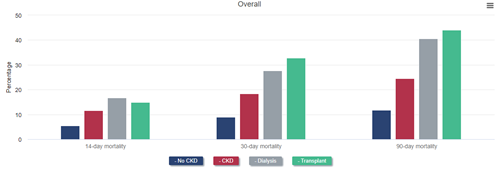

Mortality at 14, 30, and 90 days after diagnosis of COVID-19 was more than twice as high among beneficiaries with CKD as among those without. Nearly one-quarter of patients with CKD who were diagnosed with COVID-19 died within 90 days. Mortality after COVID-19 diagnosis was even higher for patients with ESRD, reaching 40.5% for patients on dialysis and 44.1% among kidney transplant recipients 90 days after diagnosis.

All-cause mortality after COVID-19 diagnosis in Medicare beneficiaries by CKD and ESRD status, January 2020 - June 2021

Data Source: 2022 United States Renal Data System Annual Data Report

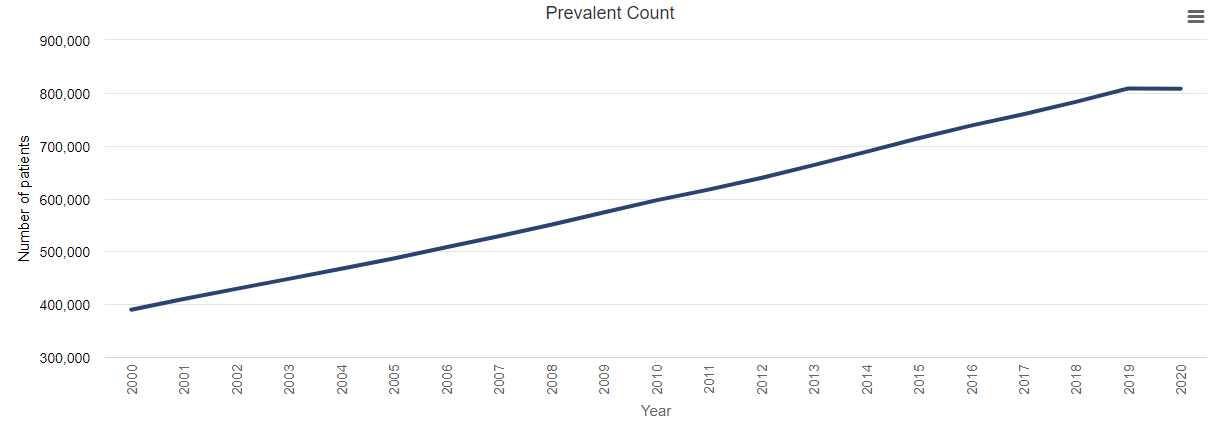

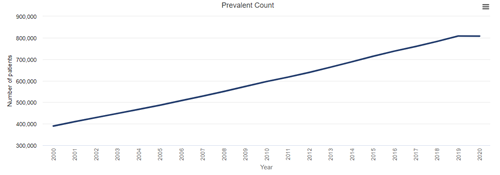

The ultimate result of the higher incidence of COVID-19 and higher mortality after diagnosis of COVID-19 among patients with CKD (including ESRD) was the unprecedented shrinking of the prevalence of diagnosed CKD and ESRD in 2020. As a result of fewer patients reaching diagnosed ESRD and the increase in mortality rate among patients with ESRD attributable to the pandemic and its effects, the rate of prevalent ESRD decreased by almost 2% in 2020.

Prevalence of ESRD, 2000-2020

Data Source: 2022 United States Renal Data System Annual Data Report

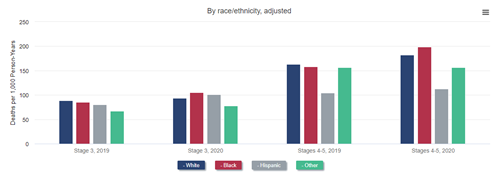

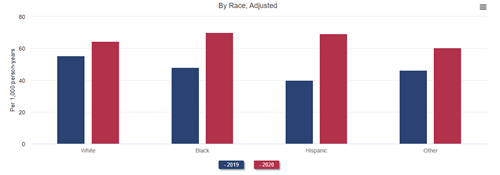

Mortality after COVID-19 was higher among Black and Hispanic Medicare beneficiaries with CKD than among White beneficiaries with CKD. As a direct result of this higher COVID-19–related mortality and, possibly, more limited access to medical care unrelated to COVID-19, mortality increased more among Black than among White beneficiaries with stage 4 or 5 CKD in 2020. This resulted in a reversal of the longstanding observation of lower mortality among Black patients with CKD. In other words, whereas Black beneficiaries with CKD had lower mortality than White beneficiaries with CKD in 2019 and prior years, they had higher mortality than their White counterparts in 2020. A similar reversal of the Black-White mortality difference occurred in transplant recipients: mortality was higher among White recipients in 2019 but higher among Black recipients in 2020. The mortality difference did not reverse among patients treated with dialysis, but it did narrow, from 43% higher mortality among White patients in 2019 to only 30% higher mortality in 2020.

All-cause mortality rate in older adults, by CKD stage and demographics, 2019 and 2020

Data Source: 2022 United States Renal Data System Annual Data Report

All-cause mortality in adult ESRD patients, by demographics and treatment modality, 2019 and 2020

Data Source: 2022 United States Renal Data System Annual Data Report

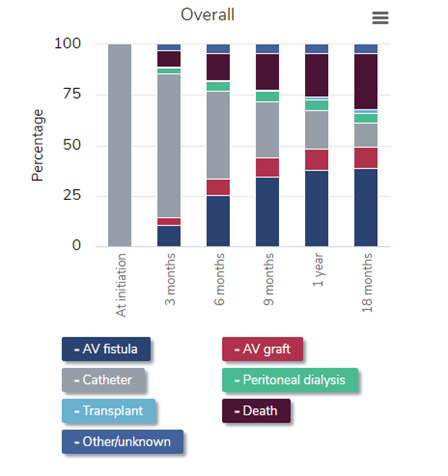

Other direct and indirect effects of COVID-19 and the changes in availability and delivery of health care that occurred in 2020 can be seen throughout the ADR and in many metrics typically tracked in the CKD population, including the ESRD population. Some particularly alarming developments among patients with ESRD follow:

The United States Renal Data System will continue to monitor these trends going forward to determine whether they rebound to prepandemic levels or continue to be worse than prior to 2020.

__________________________________________________________________________________

Cory Schaffhausen, PhD, Human Centered Design Engineer

Jon Snyder, PhD, Director, Scientific Registry of Transplant Recipients

October 6, 2022

Monitoring the organ transplantation system

The organ transplantation system in the United States is often considered a model for rigorous monitoring of health outcomes in a medical system. The Scientific Registry of Transplant Recipients (SRTR) is a national registry of data on organ transplant candidates, recipients, and donors housed within the Chronic Disease Research Group (CDRG) of the Hennepin Healthcare Research Institute (HHRI). SRTR plays a key role in monitoring the performance of the transplantation system, including the transplant programs and organ procurement organizations. SRTR analyzes data on organ transplants and publishes the results publicly on the SRTR website. Historically, these data reports have been technical and primarily used by professionals and regulators.

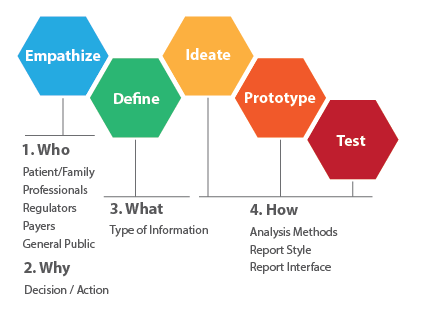

In recent years, SRTR has targeted several initiatives to provide information to patients that can be more readily understood, used to navigate the transplant journey, and used to inform key health care decisions. The human-centered design process used includes iterative steps to understand and address patient needs. SRTR has two parallel focus areas that combine to provide a roadmap for making the SRTR data registry a resource for patients. One focus area is patient, family, and donor engagement to better understand information that is important to these groups. The other focus area is the design of a website with improved navigation and data presentations to allow users to easily find and interpret the information that is important.

What information is important to patients, family members, and donors?

In July 2022, SRTR conducted the People Driven Transplant Metrics Consensus Conference. The conference was a multidisciplinary meeting that included patients, professionals, regulators, and other transplant stakeholders. Patient engagement for the meeting was an important step to work toward a better transplantation system and recognize that patients are at the core of the transplantation field. Patients were directly involved in the conference planning, and the meeting was informed by patient, family member, and donor feedback from a series of virtual feedback sessions and an online public comment forum.

The conference dedicated a series of discussions to identifying information that is of importance to patients, family members, caregivers, and donors. The scope of feedback was intentionally broad to include not only requests for information that can currently be met using existing SRTR data but also requests for information that may not currently be captured within the system. The discussions included a final step to identify the highest priorities. The feedback from all groups was compiled and synthesized into a comprehensive list to inform the development of future online resources.

How can patients, families, and donors easily navigate to find information they seek?

The patients who are seeking a transplant are a heterogeneous group, and the transplant journey is often a complex, multiyear process. The information that is important for a decision may be specific to the needs of each person, including their location, medical characteristics, and point along the transplant journey. The SRTR database includes an expansive scope of data that may be relevant. While the conference identified important information, it did not specifically address potential methods to help patients navigate and identify the information they are seeking.

In parallel to the conference, SRTR engaged with a professional design firm to begin work on a future website with patient needs factored in from the ground up. The process was iterative and based on continuous feedback to test and improve prototypes. The concept design phase included three cycles that began with seven potential website concepts and gradually converged into a preferred concept, with mockups of primary homepages and multiple patient resource pages and interactive data tools. Ongoing development will continue to integrate patient feedback into the design process.

SRTR looks forward to continuing this focus on the needs of consumers of our nation’s transplantation system, recognizing that the patients, their caregivers, living donors, and deceased donor family members are the consumers whom the entire system is designed to serve. The Agency for Healthcare Research and Quality (AHRQ) recognized the ongoing need to continue to improve health care report cards to improve information for patients, noting that “Finding ways to make public reports more relevant and useful to consumers is part of an overall strategy to improve health care.” In addition, a recent report by the National Academies of Sciences, Engineering, and Medicine made numerous recommendations to improve our nation’s transplantation system and noted “there is an opportunity to refocus the organ transplantation system around the patient experience of needing and seeking an organ transplant.”

To further this process of refocusing on the patient experience, SRTR welcomes more viewpoints. If you are a patient, caregiver, living donor, or deceased donor family member who would like to get involved, please email SRTR at SRTR@SRTR.org.

__________________________________________________________________________________

Nicholas Roetker, PhD, MS, Epidemiologist

April 1, 2022

Time-to-event analyses can provide useful information to clinicians and their patients for making informed medical decisions. Patients often ask physicians questions such as:

Physicians can respond by counseling patients about average (mean) times for various outcomes, but also commonly find it useful to speak in terms of cumulative incidence. Cumulative incidence, a measure commonly estimated using time-to-event analysis, describes the risk of an event (typically one adverse in nature) occurring before a specific time point. Given their intuitive appeal, cumulative incidence estimates are a cornerstone of United States Renal Data System (USRDS) analyses, appearing often as figures in the USRDS Annual Data Report.

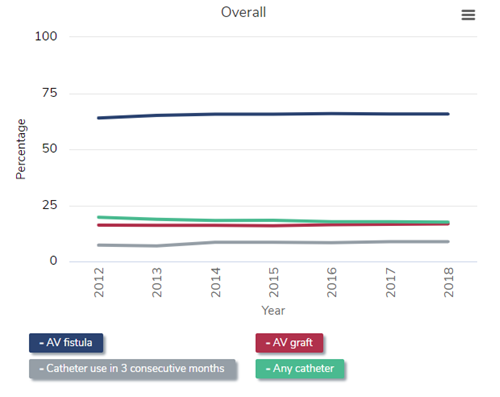

As an example, patients with end-stage kidney disease (ESKD) requiring maintenance hemodialysis often use a graft or fistula for vascular access. Even though they are considered “permanent” accesses, fistulas and grafts usually require interventions to maintain their patency over time. Some accesses may fail entirely, requiring abandonment and placement of a completely new permanent access, which is an adverse event known as “loss of secondary patency.”

Upon first successful use of a graft or fistula as access for hemodialysis, patients or their health care providers may wonder what the chances are that the access will lose secondary patency (ie, require final abandonment) within the first 1 or 2 years of use. This type of question can be informed directly with a cumulative incidence analysis.

However, when estimating or interpreting cumulative incidence, it is important to consider the impact of competing risk events, which are events that a patient may experience that would prevent the occurrence of the adverse event under study. Death, in particular, is an important competing risk to consider in most time-to-event analyses involving populations with high rates of morbidity and mortality, such as the ESKD population. As one might imagine, this is a sensitive issue for physicians and other health care providers to discuss with patients, who may think answers to reasonable clinical questions are easy to provide. When patients wonder how long a fistula is likely to last, they may implicitly assume they will outlive the access; they may not fully consider the possibility that death could occur before the access is lost.

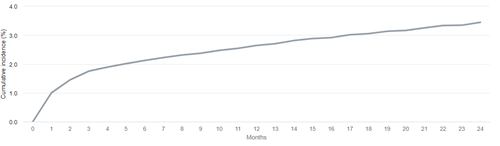

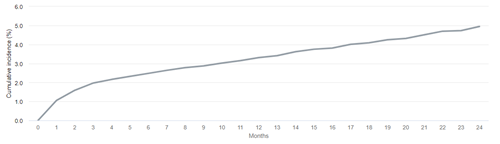

Whether or not death is accounted for as a competing risk in the analysis will lead to different interpretations of the resulting cumulative incidence estimate, with potentially important implications for counseling patients. Let us consider an example estimating the 2-year cumulative incidence of loss of secondary patency among patients initiating hemodialysis with a functioning fistula in 2016-2018. In the analysis explicitly accounting for death as a competing risk, we see that there is an estimated 3.4% risk of experiencing secondary patency loss, before dying, by 2 years after first use of the access.

Cumulative incidence of loss of secondary access patency after HD initiation with a fistula in 2016-2018, accounting for competing risks

Data Source: 2021 United States Renal Data System Annual Data Report

Conversely, in the analysis that does not account for death as a competing risk, we see that the corresponding 2-year risk of loss of secondary patency is estimated to be slightly higher (5.0%).

Cumulative incidence of loss of secondary access patency after HD initiation with a fistula in 2016-2018, not accounting for competing risks

Data Source: 2021 United States Renal Data System Annual Data Report

In this analysis, death is treated as censoring in the same way as any other, more “conventional” censoring event (eg, administrative end of follow-up). Time-to-event analyses require making the assumption that the risk of experiencing the event of interest is the same, on average, for patients who remain in the study and patients who are censored, a concept known as noninformative censoring. However, assuming that patients who die share the same risk of going on to lose secondary patency as those who remain alive is, frankly, absurd—those who die can never experience any future event!

As such, for the analysis that does not treat death as a competing risk, one must interpret the cumulative incidence as the risk of secondary patency loss in a hypothetical world in which death can be prevented from occurring. In other words, we must assume that for the patients who died, had we only been able to prevent death and follow them forward for a longer time, we would have observed that they had the same underlying risk of patency loss as those who did not die. In general, the reasonableness of this assumption is going to depend on the specific clinical context of the time-to-event analysis.
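The difference between the two analyses can be seen in a small Python sketch. With competing risks accounted for, the cumulative incidence at each event time adds the probability of still being alive with a working access multiplied by the fraction of at-risk patients who lose patency (an Aalen-Johansen type estimate). Without that accounting, deaths are simply treated as censoring and the estimate is one minus the Kaplan-Meier curve. The ten patient records below are hypothetical toy data, not USRDS data, and the function name is invented for illustration.

```python
# Hypothetical toy data: (follow-up time in months, what ended follow-up).
records = [
    (1, "loss"), (2, "death"), (3, "death"), (4, "loss"), (5, "censor"),
    (6, "death"), (7, "loss"), (8, "censor"), (9, "death"), (10, "censor"),
]

def cumulative_incidence(data, horizon, account_for_death=True):
    """Cumulative incidence of patency loss by `horizon`, with or without competing risks."""
    event_times = sorted({t for t, kind in data if kind != "censor" and t <= horizon})
    free_of_events = 1.0  # probability of no event (as defined by the chosen mode) so far
    incidence = 0.0
    for t in event_times:
        at_risk = sum(1 for u, _ in data if u >= t)
        losses = sum(1 for u, kind in data if u == t and kind == "loss")
        deaths = sum(1 for u, kind in data if u == t and kind == "death")
        if account_for_death:
            # Only patients still alive with a working access can lose patency now.
            incidence += free_of_events * losses / at_risk
            free_of_events *= 1 - (losses + deaths) / at_risk
        else:
            # Death is treated like censoring: it only shrinks the at-risk set.
            free_of_events *= 1 - losses / at_risk
    return incidence if account_for_death else 1 - free_of_events

with_competing_risks = cumulative_incidence(records, 10, account_for_death=True)
death_as_censoring = cumulative_incidence(records, 10, account_for_death=False)
```

In this toy example the estimate that accounts for death is 0.32, while the estimate that treats death as censoring is about 0.42. The second number is higher for the same reason that 5.0% exceeds 3.4% above: it describes a hypothetical world in which the patients who died would have remained at risk of losing their access.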

Many epidemiologists would advocate for explicit accounting of competing risks when estimating cumulative incidence. This may be particularly true in a disease state such as ESKD, where patients face complications related to both their treatment choices and their general health. For a patient with a short predicted remaining lifespan, electing to receive an “inferior” vascular access (ie, a graft) may represent the best option if the placement of the access is less invasive and it will be ready for use in dialysis more quickly. In this scenario, providing cumulative incidence estimates that account for competing risks may provide the best information for weighing risks and benefits of different treatment options. Thus, in many situations, clinicians should learn to sensitively employ cumulative incidence calculations that incorporate the idea of competing risks—especially death—when speaking to patients about what the future might hold for their health.

__________________________________________________________________________________

Jonathan Miller, PhD, MPH, Biostatistician

February 1, 2022

In the December 15, 2021, Chronic Disease Research Group (CDRG) blog post, Dr. James Wetmore asked the following question and follow-up questions: “‘What happened to all these patients with advanced CKD [chronic kidney disease] approaching the need for dialysis and kidney transplantation?’ Will there be a make-up ‘surge’ in incident dialysis patients in the future? Or, more ominously, did many people with stage 5 CKD die before they ever had a chance to initiate dialysis (or receive a kidney transplant), due either to COVID-19 or to non–COVID-19 causes that became more difficult to diagnose and treat as the pandemic stressed the US health care system?”

Dr. Wetmore was discussing United States Renal Data System (USRDS) data, but this question is also important for CDRG’s other registry contract, the Scientific Registry of Transplant Recipients (SRTR). Patients receiving dialysis are generally also candidates for, and future recipients of, kidney transplants. Additionally, there is concern that COVID-19 will have lasting impacts on patients at risk or receiving other solid organ transplants such as heart, lung, liver, pancreas, kidney, intestine, and multiorgan (all of which SRTR currently monitors). Given the large overlap in the dialysis and kidney transplant candidate populations, SRTR has published findings on similar increases in mortality during the pandemic for kidney transplant candidates and shown that the number of candidates on the waiting list remains reduced.

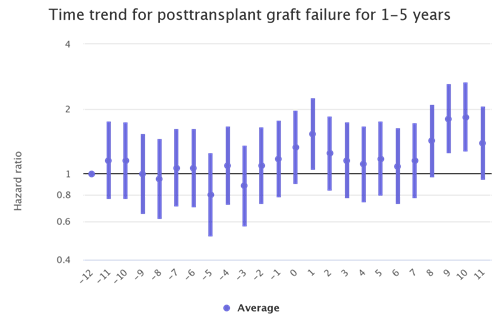

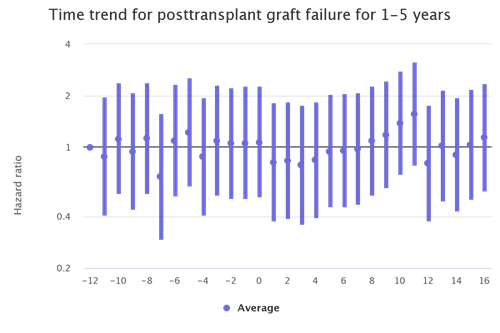

A source for COVID-19 impact updates regarding transplant candidate and recipient populations is the SRTR COVID-19 app. Some trends shown on this app identify increases in both waitlist mortality and graft failure in the kidney candidate and recipient populations during the first 2 months of the pandemic and during the winter 2020-2021 surge (Figure 1).

Figure 1: Month trends in kidney recipient graft failure

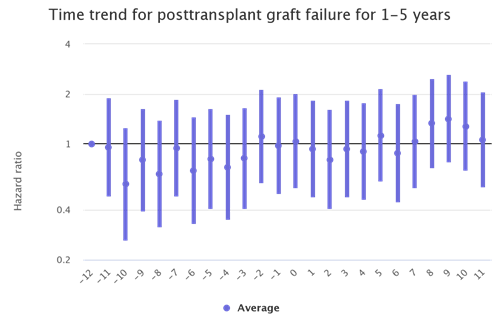

The kidney transplant populations show the most dramatic increases in waitlist mortality and graft failure during the waves of the pandemic. There are also increases during the waves among the liver and lung transplant populations, although they are not as stark (Figures 2 and 3).

Figure 2: Month trends in lung recipient graft failure

Figure 3: Month trends in liver recipient graft failure

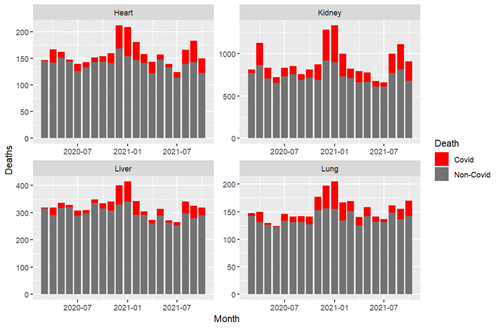

As with the USRDS data, it is possible with SRTR data to identify deaths by transplant center for which COVID-19 is listed as the cause. By the winter 2020-2021 pandemic surge, there were clear surges in deaths attributable to COVID-19 among heart, lung, liver, and kidney recipients. This alarming pattern reemerged during the Delta variant surge in fall 2021 despite vaccination being widely available (Figure 4).

Figure 4: Deaths attributable to COVID-19 among transplant recipients

Much like with pretransplant and dialysis populations, there are continuing impacts of COVID-19 on transplant recipients. While we didn’t know in March 2020 that we would still be monitoring COVID-19’s impact on transplant recipients 2 years later, SRTR is continuing to track outcomes in this higher risk population.

For more information on SRTR’s COVID-19 research efforts, visit the SRTR website.

__________________________________________________________________________________

James Wetmore, MD, MS, Medical Director for Nephrology Research

December 15, 2021

Amazingly, an entire year has passed since the Chronic Disease Research Group (CDRG) last blogged about COVID-19 and kidney disease. A year later, it’s fair to ask, “What have we learned?” The obvious related questions are, “What knowledge gaps exist, and what more do we need to learn?”

As it turns out, we’ve learned quite a bit. Myriad contributions about the association between COVID-19 and outcomes in patients with kidney disease have been made by investigators around the world, and, indeed, some of the work has been done right here at CDRG. Glance at the table of contents of any kidney disease journal over the past 2 years, and you’ll be astounded to see how prominently the pandemic and its implications have been featured. It’s no exaggeration to say that these research efforts are unprecedented in the history of nephrology.

For this blog, let’s examine several questions: (i) What impact did the pandemic have on mortality in patients receiving dialysis? (ii) What happened to the prevalent dialysis census as a result of the pandemic? and (iii) What happened to patients with late-stage chronic kidney disease (CKD) who were facing transition to dialysis (the incident dialysis census)? Then we’ll speculate on some key epidemiologic questions that need answering.

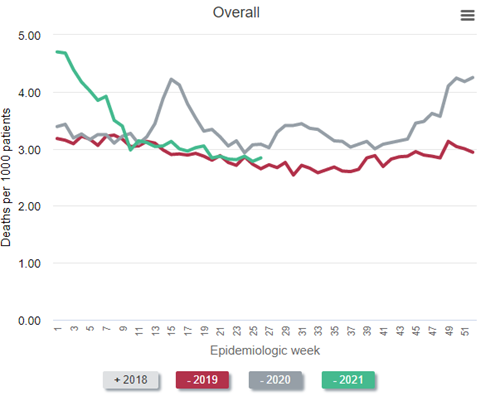

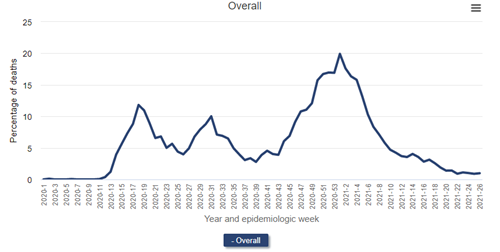

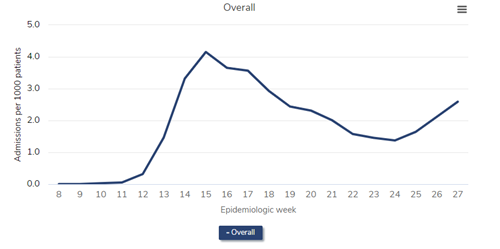

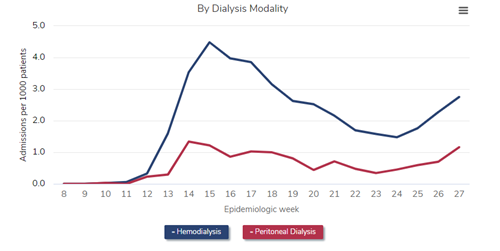

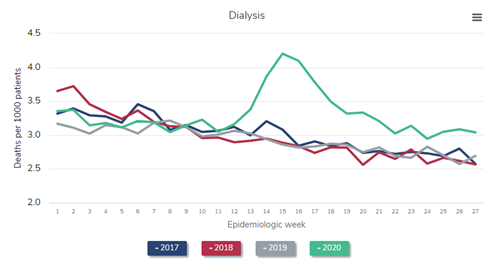

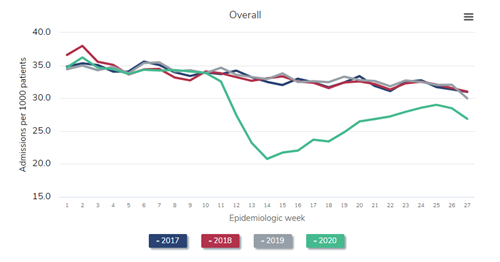

First, let’s look at the all-cause mortality in patients receiving dialysis. (I’ve selected a version of the figure that specifically shows dialysis patients.)

All-cause mortality rate among patients with ESRD, 2018-2021

Data Source: 2021 United States Renal Data System Annual Data Report

In the figure above, which is Figure 13.12a of the end-stage renal disease (ESRD) volume in the recently released 2021 United States Renal Data System (USRDS) Annual Data Report (ADR), the red line represents all-cause mortality (measured as deaths per 1,000 persons) in 2019. Consider this red line as a prepandemic baseline pattern of weekly death rates. (Note that deaths tend to drop in the summer months and peak in December and January.) Then inspect the gray line, which represents 2020. Note the tremendous spike in the weekly death rate during the first wave of the pandemic, “epidemiologic weeks” 13-23 or so (representing the last third of March to the beginning of June 2020). During week 15 (early April), the weekly death rate jumped by about 45%! After another spike during epidemiologic weeks 29-36 or so, a precipitous increase began at about week 45, or early November. The winter of 2020-2021 was calamitous: The weekly death rate per 1,000 patients receiving dialysis reached 4.7 in epidemiologic week 1 of 2021, an astounding increase of nearly 50% relative to the same period in 2019.

Were these deaths among dialysis patients due to COVID-19? The circumstantial evidence is overwhelming—what else could have happened in 2020 and 2021, compared with 2019, to have caused this? What else could have exhibited a temporal pattern that mimicked the pattern of death in the overall US population? However, it turns out we need not rely on circumstantial evidence—we have direct evidence.

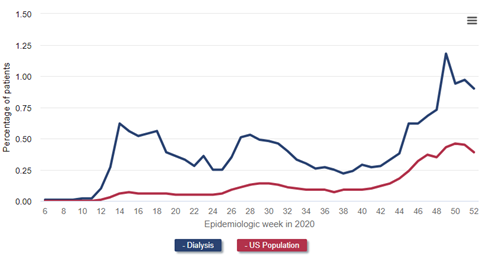

Percentage of deaths due primarily to COVID-19 among patients undergoing dialysis, 2020-2021

Data Source: 2021 United States Renal Data System Annual Data Report

The figure above (Figure 13.13 from the 2021 USRDS ADR) shows deaths attributed primarily to COVID-19 among patients receiving dialysis. The source is the Medicare-mandated Form 2746, or the ESRD Death Notification Form, required for all deaths of persons with end-stage kidney disease (regardless of whether Medicare was the payer). During the initial wave of the pandemic, nearly 12.0% of deaths among patients receiving dialysis were officially attributed to COVID-19 at epidemiologic week 18. During the pandemic surge in the winter of 2020-2021, fully 1 in 5 deaths were attributed to COVID-19 (epidemiologic week 1 of 2021)!

Inspect the weekly incidence of diagnoses of COVID-19 in patients receiving dialysis and in the general population (the latter derived from Centers for Disease Control and Prevention data) in the following figure, which is Figure 13.4 from the 2021 USRDS ADR.

Data Source: 2021 United States Renal Data System Annual Data Report

One can easily see how much more common COVID-19 diagnoses were among patients receiving dialysis, and how the temporal patterns of COVID-19 diagnoses in the general population were greatly exaggerated in the dialysis population.

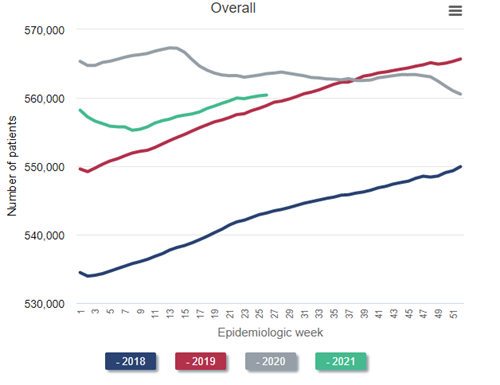

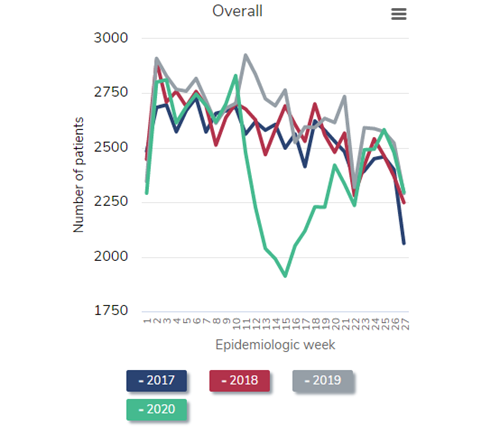

Next, let’s take a look at the prevalent dialysis census. The following figure is a reproduction of Figure 13.11 from the just-released 2021 USRDS ADR. Again, I’ve selected a version of the figure that shows only the dialysis patient census (that is, not including patients with a kidney transplant).

Number of prevalent ESRD patients, 2018-2021

Data Source: 2021 United States Renal Data System Annual Data Report

Look at the blue line, representing the steady historical increase in the census of patients receiving dialysis throughout 2018. Naturally, the left side of the red line (2019) picks up where the right side of the blue line (2018) leaves off. Again, observe the steady growth in the dialysis census throughout 2019, exactly what would have been predicted. But then inspect the gray line (2020). Instead of increasing steadily throughout 2020, as would have been predicted, the census decreases during the first wave of the pandemic (around epidemiologic week 15 of 2020). This is the first census drop to occur since tracking of these data began in the early 1980s. The calamitous winter of 2020-2021 drove the dialysis census to a nadir of 555,264 in epidemiologic week 8 of 2021 (final full week of February). This represents a decrease of over 2% from the beginning of the pandemic, but in reality it represents a “deficit” of nearly 4% once one considers what the prevalent dialysis census “should have” been, based on historical trends, had the pandemic not occurred.
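The difference between the observed decrease and the “deficit” is easier to see with a little arithmetic. The sketch below is illustrative only: the nadir of 555,264 comes from the text, but the prepandemic census and the projected census are hypothetical round numbers chosen to be roughly consistent with the percentages quoted above. They are not values taken from the USRDS ADR.

```python
def percent_shortfall(observed: float, reference: float) -> float:
    """Percent by which `observed` falls short of `reference`."""
    return (reference - observed) / reference * 100


NADIR_CENSUS = 555_264         # from the text: epidemiologic week 8 of 2021
PRE_PANDEMIC_CENSUS = 567_000  # hypothetical value, for illustration only
PROJECTED_CENSUS = 578_000     # hypothetical: where historical growth might have led

# Decrease measured against where the census actually stood before the pandemic.
observed_drop = percent_shortfall(NADIR_CENSUS, PRE_PANDEMIC_CENSUS)

# "Deficit" measured against where the census would have been without the pandemic.
deficit = percent_shortfall(NADIR_CENSUS, PROJECTED_CENSUS)

print(f"Observed decrease: {observed_drop:.1f}%")
print(f"Deficit vs. projection: {deficit:.1f}%")
```

The same nadir yields two different percentages because the reference point moves: the projection keeps growing while the actual census shrinks, so the deficit is always larger than the observed decrease.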

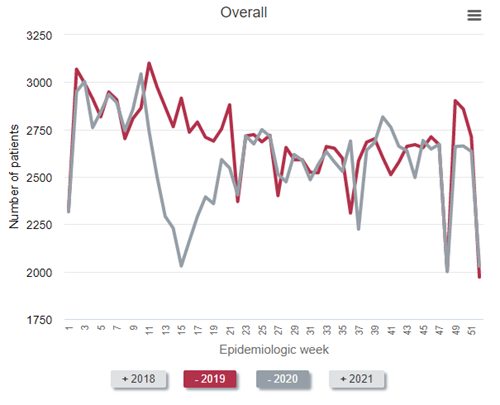

Not all of the decrease in the prevalent census can be attributed to an increase in weekly death rates among prevalent patients receiving dialysis. Some of the “dialysis deficit” is attributable to fewer patients with advanced CKD being declared as having end-stage kidney disease by virtue of initiating dialysis. Observe the findings in the figure below.

To best make the point, it is easiest to compare 2019 (the red line) with 2020 (the gray line). As can be seen, far fewer patients with newly declared end-stage kidney disease initiated dialysis from epidemiologic week 11 to about week 22 in 2020 (the first wave of the pandemic in the United States) than in the same weeks of 2019. In fact, during epidemiologic week 15 (early April 2020), the number who initiated dialysis was about 30% below historical norms. “Only” about 2,030 individuals initiated dialysis that week, a weekly total that has not been seen for nearly a decade.
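For a rough sense of scale, the two figures quoted above can be combined to back out what a “normal” week might have looked like. This is a back-of-the-envelope sketch, not a number reported in the USRDS ADR: both inputs are approximate (“about 30%,” “about 2,030”), so the result is approximate too, and the helper name `implied_baseline` is simply a label chosen for this illustration.

```python
def implied_baseline(observed: float, percent_below: float) -> float:
    """Back out the reference value when `observed` sits `percent_below` percent under it."""
    return observed / (1 - percent_below / 100)


observed_starts = 2_030  # from the text: epidemiologic week 15 of 2020
percent_below_norm = 30  # from the text: "about 30% below historical norms"

typical_starts = implied_baseline(observed_starts, percent_below_norm)
missing_starts = typical_starts - observed_starts

print(f"Implied typical weekly starts: about {typical_starts:,.0f}")
print(f"Implied shortfall that week: about {missing_starts:,.0f}")
```

Under these assumptions, a single week at the trough of the first wave would account for several hundred patients who did not start dialysis when they otherwise might have.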

A major question that remains is “What happened to all these patients with advanced CKD approaching the need for dialysis and kidney transplantation?” Will there be a make-up “surge” in incident dialysis patients in the future? Or, more ominously, did many people with stage 5 CKD die before they ever had a chance to initiate dialysis (or receive a kidney transplant), due either to COVID-19 or to non–COVID-19 causes that became more difficult to diagnose and treat as the pandemic stressed the US health care system? The long-term effects of the pandemic on the population with kidney disease, including those with (or transitioning to) end-stage kidney disease, may be the next great research frontier in kidney disease epidemiology.

As an acknowledgement, our former CDRG colleague Eric Weinhandl, PhD, MS, led most of our COVID-19–related analyses in his capacity with the USRDS. Thanks, Eric, and best of luck in your new endeavors.

__________________________________________________________________________________

Eric Weinhandl, PhD, MS, Senior Epidemiologist

September 9, 2021

Much has been written about the potential of incremental hemodialysis to improve outcomes in the early months of dialysis treatment, but what has been published can be reasonably characterized as a constellation of expert opinions and observational data analyses.

But now we have a randomized controlled trial. In a new article in Kidney International, Vilar and colleagues describe a randomized controlled feasibility trial of 55 incident hemodialysis patients in four centers in the United Kingdom. Let’s review the details.

Inclusion and exclusion criteria

Trial subjects were adults enrolled within 3 months after hemodialysis initiation. Notably, subjects were required to have a residual renal urea clearance of at least 3 mL/min/1.73 m². Patients expected to require high-volume ultrafiltration were excluded.

Intervention

Subjects randomly assigned to standard care received hemodialysis during three 3.5- to 4-hour sessions each week. Minimally adequate dialytic urea clearance was defined by weekly standardized Kt/V of at least 2.0.

Subjects randomly assigned to incremental hemodialysis received two 3.5- to 4-hour treatments per week. Minimally adequate urea clearance was likewise defined by weekly standardized Kt/V of at least 2.0, but both residual renal and dialytic urea clearance contributed to the calculation of total clearance. Notably, the trial permitted more frequent hemodialysis to achieve the urea clearance target and prevent volume overload and hyperkalemia.

Outcomes

The primary clinical outcomes were the rate of change in residual kidney function and the incidence of serious adverse events, including death, major cardiovascular events, and hospitalization for volume overload, hyperkalemia, lower respiratory tract infections, and vascular access complications. Secondary clinical outcomes included the proportion of patients with residual renal urea clearance of at least 2 or 3 mL/min/1.73 m² or recovery of kidney function and quality-of-life scores. The usual panel of biochemical parameters, blood pressure, and medication use was recorded, as were healthcare provider costs.

Now let’s look at the results of this trial.

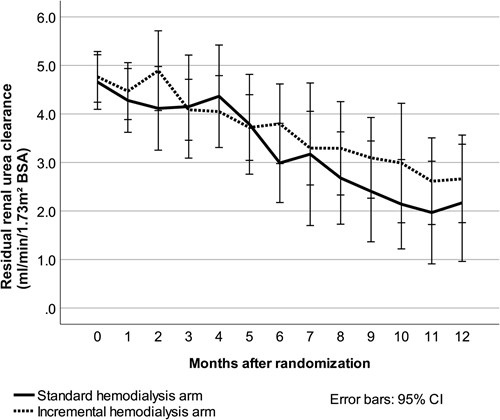

Residual kidney function

Slopes of both residual renal urea clearance and estimated glomerular filtration rate during 12 months of follow-up were statistically similar in the two treatment groups, although both slopes were less steep with incremental hemodialysis. The slope of residual renal urea clearance, adjusted for body surface area, is shown in the figure below.

After 6 months, 92% of patients initially treated with incremental hemodialysis had residual renal urea clearance of at least 2 mL/min/1.73 m², whereas only 75% of patients initially treated with conventional hemodialysis had clearance at that level. However, the difference was not statistically significant. With a threshold of residual renal urea clearance of at least 3 mL/min/1.73 m², corresponding statistics were 56% with both incremental and conventional hemodialysis. Unsurprisingly, this difference also lacked statistical significance.

Serious adverse events

Remarkably, the rate of serious adverse events probably or possibly related to dialysis was only 0.9 events per patient-year with incremental hemodialysis but 1.9 events per patient-year with conventional hemodialysis. This difference was statistically significant (P=0.007).
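To put the two rates side by side, a crude rate ratio and rate difference can be computed directly from the figures above. This is only a descriptive sketch: it uses the two point estimates quoted in the text and does not attempt to reproduce the trial’s statistical test or its P value, which would require the underlying event counts and follow-up time.

```python
incremental_rate = 0.9   # events per patient-year, from the text
conventional_rate = 1.9  # events per patient-year, from the text

# Rate ratio: how the incremental rate compares with the conventional rate.
rate_ratio = incremental_rate / conventional_rate

# Rate difference: events avoided per patient-year of treatment.
rate_difference = conventional_rate - incremental_rate

# Relative reduction implied by the rate ratio.
relative_reduction = (1 - rate_ratio) * 100

print(f"Rate ratio: {rate_ratio:.2f}")
print(f"Rate difference: {rate_difference:.1f} events per patient-year")
print(f"Relative reduction: {relative_reduction:.0f}%")
```

In other words, the observed event rate with incremental hemodialysis was a bit less than half the rate with conventional hemodialysis, or roughly one fewer event per patient-year.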

Biochemistry

After 12 months, there were subtle differences between the two groups. Serum phosphorus increased in patients on incremental hemodialysis but not in patients on conventional hemodialysis. Meanwhile, phosphate binder dose increased to a greater extent with incremental hemodialysis than with conventional hemodialysis. In addition, serum bicarbonate decreased with incremental hemodialysis but increased modestly with conventional hemodialysis.

Between months 1 and 12 of follow-up, extracellular water increased 1.8 L in patients on incremental hemodialysis but only 0.8 L in patients on conventional hemodialysis. Although pre- and post-dialysis blood pressure changes seemed similar with both treatments, the number of antihypertensive medications per patient increased by 0.8 agents with incremental hemodialysis but only 0.1 with conventional hemodialysis.

Costs

Total costs were 19,875 British pounds with incremental hemodialysis but 26,125 British pounds with conventional hemodialysis. Costs of transport, hemodialysis, and adverse events were all lower with incremental hemodialysis.

Analysis

This was a small but provocative trial. We are seeing a mix of positive and negative signals with incremental hemodialysis. The balance of the data suggests modestly improved preservation of residual kidney function with incremental hemodialysis, although the benefit is statistically tenuous and might not be replicated in a larger randomized controlled trial. The data also show that the incidence of serious adverse events possibly or probably related to dialysis was lower with incremental hemodialysis.

On the other hand, the predictable effects of less frequent hemodialysis also seem apparent: lower serum bicarbonate, higher serum phosphorus, and greater use of both phosphate binders and antihypertensive medications. One could argue that the data on phosphorus and phosphate binders are inconsequential, considering the uncertainty about the efficacy of hyperphosphatemia treatment. Nonetheless, increasing use of antihypertensive medications and greater gains in extracellular water with incremental hemodialysis suggest to me that volume control deteriorates over 12 months of treatment. Unfortunately, we do not know how many patients were switched from two to three sessions per week, so whether proactive adjustment of the frequency can avert gradually worsening volume overload is unclear.

What is clear is that incremental hemodialysis lowers costs by nearly 25% relative to conventional hemodialysis. Much of this cost reduction can be traced to hemodialysis itself.
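The “nearly 25%” figure follows directly from the two cost totals reported above, as this short calculation shows. The variable names are just labels for this illustration; the amounts are the ones quoted in the Costs section.

```python
incremental_cost = 19_875   # British pounds, from the text
conventional_cost = 26_125  # British pounds, from the text

savings = conventional_cost - incremental_cost
relative_reduction = savings / conventional_cost * 100

print(f"Savings: {savings:,} British pounds")
print(f"Relative reduction: {relative_reduction:.1f}%")
```

The reduction works out to just under 24%, which is consistent with describing it as nearly one quarter.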

We need to keep assessing this strategy, preferably with larger randomized controlled trials and, eventually, with trials of US patients. Incremental hemodialysis obviously reduces healthcare spending and, frankly, reductions might be larger in the United States, considering the high cost of hospitalization. Incremental hemodialysis might better preserve residual kidney function, although the limited sample size of this feasibility trial offers weak evidence in support of this hypothesis. Incremental hemodialysis might also increase the risk of inadequate solute clearance and ultrafiltration, absent both close monitoring and proactive titration of hemodialysis frequency.

At this early stage, the question foremost on my mind is this: Does incremental hemodialysis provide short-term gains at the expense of long-term losses? Preservation of residual kidney function is clearly important, but we must preserve enough of that function to outweigh the risk of compromising cardiovascular health later in the course of dialysis.

__________________________________________________________________________________

Allyson Hart, MD, MS, Senior Staff for Patient and Family Affairs

August 4, 2021

I fell in love with research when I was studying epidemiology as a nephrology research fellow. I felt the same ripple of excitement building statistical models as I had years before studying the biochemistry that led me into medicine and, ultimately, to nephrology (yes, I am aware that I am an uber nerd... perhaps a topic for another blog). I also loved the idea of contributing to knowledge that might help patients.